Identifying Bot Traffic

Bot traffic is non-human traffic to your website generated by spiders, robots, and other automated programs. Much of it looks like legitimate traffic but is really spam. This low-quality traffic can skew your data and undermine your ability to make well-informed decisions for your business.

If you notice unexpected spikes in your web traffic, bots may be the cause. These spikes often come from referral sources, so to check whether your referral traffic is legitimate, review your referral sources in Google Analytics.

Once in Google Analytics, first check whether you have many referral sources that don’t look relevant to your website. This is the first indication that you may have bot traffic on your website. Next, look at the bounce rate and average visit duration for those sources. Visits that show a 100 percent bounce rate with an average visit duration of 00:00:00 are a strong sign that the traffic is not legitimate.
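If you export your referral report, the check described above can be automated. The Python sketch below is a minimal illustration, assuming a hypothetical row layout (`source`, `sessions`, `bounce_rate`, `avg_duration_seconds`) rather than any particular export format. The `min_sessions` threshold is also an assumption, added so that sources with only a visit or two are not flagged.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ReferralRow:
    """One row of an exported referral report (hypothetical layout)."""
    source: str
    sessions: int
    bounce_rate: float           # percent, 0-100
    avg_duration_seconds: float  # 00:00:00 in the report is 0.0 here


def flag_suspicious(rows: List[ReferralRow], min_sessions: int = 10) -> List[str]:
    """Return sources where every session bounced with zero time on site."""
    return [
        row.source
        for row in rows
        if row.sessions >= min_sessions
        and row.bounce_rate >= 100.0
        and row.avg_duration_seconds == 0.0
    ]


if __name__ == "__main__":
    report = [
        ReferralRow("news.example.com", 120, 42.5, 95.0),
        ReferralRow("free-traffic.example", 300, 100.0, 0.0),
        ReferralRow("tiny.example", 2, 100.0, 0.0),
    ]
    print(flag_suspicious(report))  # ['free-traffic.example']
```

A flagged source is a candidate for a closer look, not proof of a bot; the thresholds are worth tuning against your own traffic.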

So, why do bots exist? Good bots help Google and other search engines crawl web pages so they can be placed correctly on the search engine results pages. Bad bots, which account for 29 percent of all web traffic according to an Incapsula study, crawl content for a number of malicious reasons: they may be attacking web servers, stealing data or content, or driving up costs for website owners.

How Can You Eliminate Bot Traffic?

You can combat these annoying data disruptors in just a few steps.

1. Check the Bot Filter Box in Admin View Settings

In the Admin View Settings area of Google Analytics, there is a box you can check to filter out data from known bots and spiders. Since the option was made available in 2014, many websites have removed at least a portion of their bot traffic with a single click.

This handy checkbox excludes the bots and spiders on the IAB/ABC International Spiders & Bots List, a list you would have to pay for to see the individual names. You won’t know for sure which bots and spiders are included without buying the list, which can cost up to $14,000 for non-IAB members, but you will keep them from affecting your referral traffic data.

2. Use IP Addresses to Block Bots

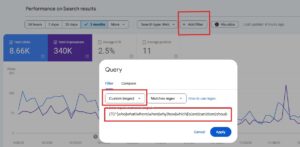

Even though you can’t see IP addresses in your Google Analytics reports, you can still block them using Google Analytics View Filters. To do this, use the dataLayer in Google Tag Manager, following the instructions for blocking IP addresses from Jon Meck of LunaMetrics.
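The matching logic behind an IP exclusion can be sketched with Python’s standard `ipaddress` module. This is not how Google Analytics applies View Filters internally; it is a minimal illustration, assuming your hits have been exported as dictionaries with a hypothetical `ip` key. The blocked ranges are reserved documentation addresses used as placeholders.

```python
import ipaddress
from typing import Dict, List

# Placeholder blocklist. 203.0.113.0/24 and 198.51.100.0/24 are reserved
# documentation ranges; replace them with the addresses you want to exclude.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.17/32"),
]


def is_blocked(ip: str) -> bool:
    """True if the address falls inside any blocked network (IPv4 or IPv6)."""
    addr = ipaddress.ip_address(ip)
    # An IPv6 address is never "in" an IPv4 network, so mixed lists are safe.
    return any(addr in net for net in BLOCKED_NETWORKS)


def filter_hits(hits: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Keep only hits whose hypothetical 'ip' field is not blocked."""
    return [hit for hit in hits if not is_blocked(hit["ip"])]
```

Using CIDR networks rather than exact strings lets one entry cover a whole range that a bot may rotate through.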

Blocking bot traffic can help free up your Google Analytics hits for real visits. You can also block bots from visiting your website at all, which could help prevent spam and DDoS attacks. To do this, edit your site’s .htaccess file to block IP addresses from loading web resources, using this handy tool.
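If your server runs Apache 2.4 and your host allows these directives in .htaccess, one common way to deny specific addresses is a `<RequireAll>` block containing `Require not ip` lines. The Python helper below is a hypothetical sketch that builds such a block from a list of addresses or CIDR ranges, validating each entry first so a typo does not end up in your server configuration.

```python
import ipaddress
from typing import Iterable


def htaccess_block(entries: Iterable[str]) -> str:
    """Build an Apache 2.4 snippet that denies the given IPs or CIDR ranges."""
    lines = ["<RequireAll>", "    Require all granted"]
    for entry in entries:
        # ip_network raises ValueError on malformed input; strict=False
        # accepts a host address with a prefix, e.g. 198.51.100.7/24.
        network = ipaddress.ip_network(entry.strip(), strict=False)
        lines.append(f"    Require not ip {network}")
    lines.append("</RequireAll>")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(htaccess_block(["203.0.113.5", "198.51.100.0/24"]), end="")
    # <RequireAll>
    #     Require all granted
    #     Require not ip 203.0.113.5/32
    #     Require not ip 198.51.100.0/24
    # </RequireAll>
```

`Require not ip` cannot stand alone in Apache 2.4, which is why the sketch wraps it in `<RequireAll>` alongside `Require all granted`.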

3. Get Rid of Bots by User Agent

Since bots can switch between IP addresses and be difficult to identify, they can bypass the previous two steps on this list. This is where Custom Dimensions come into play: you can record each visitor’s User Agent as a Custom Dimension and send it through Google Tag Manager. First, create the Custom Dimension in Google Analytics and note its Index. Next, in Google Tag Manager, create a new Variable named User Agent with the value navigator.userAgent. Lastly, in your Google Analytics Pageview Tag, fill the Custom Dimension slot with the Index from earlier and enter the Variable {{User Agent}} for the Value.

After a couple of days, you can check back in Google Analytics to see which User Agents act like bots and use filters to eliminate them.
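Once a few days of User Agent data have accumulated, a regular expression is a convenient way to describe the agents you want to exclude. The pattern below is a hypothetical starting point built from common self-identifying substrings, not a definitive bot list, and treating an empty User Agent as a bot is also an assumption. Broad substrings such as `bot` can match legitimate browsers, so test any pattern against your own data before using it in a filter.

```python
import re

# Hypothetical pattern of substrings that many self-identifying bots,
# crawlers, and scripted HTTP clients include in their User Agent.
BOT_UA_PATTERN = re.compile(
    r"bot|crawl|spider|slurp|headless|python-requests|curl|wget",
    re.IGNORECASE,
)


def looks_like_bot(user_agent: str) -> bool:
    """Flag empty User Agents and those matching the pattern above."""
    if not user_agent or not user_agent.strip():
        return True
    return BOT_UA_PATTERN.search(user_agent) is not None


if __name__ == "__main__":
    agents = [
        "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/120.0 Safari/537.36",
        "curl/8.4.0",
    ]
    for ua in agents:
        print(looks_like_bot(ua), ua)
```

Bots that spoof a normal browser User Agent will pass this check, so it complements rather than replaces the behavioral signals (bounce rate, visit duration) described earlier.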

4. Add in a CAPTCHA Requirement

CAPTCHA is a great option when everything else we’ve mentioned so far isn’t enough. Adding a CAPTCHA to your website requires users to follow simple instructions to verify that they are human. In most cases, a CAPTCHA is only required on a visitor’s first visit, because a cookie records that they have already passed it.

A CAPTCHA can ask users to click a button, answer a basic math problem, select specific images, or identify a hidden image. These simple tasks are difficult for most bots, but as bots become more advanced, CAPTCHA requirements will continue to evolve to stay ahead of them.
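The basic-math variety of CAPTCHA, together with the cookie that lets returning visitors skip it, can be sketched in Python. This is a minimal illustration under stated assumptions: the secret key is generated at startup rather than loaded from configuration, solved challenge tokens are not tracked (so one could be replayed), and a simple sum is far easier for bots to solve than the image-based challenges hosted CAPTCHA services use. All function names are hypothetical.

```python
import hashlib
import hmac
import secrets
from typing import Tuple

# Assumption: in production this key would come from server configuration.
SECRET_KEY = secrets.token_bytes(32)


def _sign(message: str) -> str:
    return hmac.new(SECRET_KEY, message.encode(), hashlib.sha256).hexdigest()


def _matches(message: str, signature: str) -> bool:
    # Compare as bytes in constant time so odd input cannot raise or leak timing.
    return hmac.compare_digest(_sign(message).encode(), signature.encode())


def new_challenge() -> Tuple[str, str]:
    """Return (question, token); the token binds the answer without revealing it."""
    a, b = secrets.randbelow(9) + 1, secrets.randbelow(9) + 1
    nonce = secrets.token_hex(8)
    token = f"{nonce}:{_sign(f'challenge:{nonce}:{a + b}')}"
    return f"What is {a} + {b}?", token


def check_answer(token: str, answer: str) -> bool:
    nonce, _, signature = token.partition(":")
    return _matches(f"challenge:{nonce}:{answer.strip()}", signature)


def passed_cookie() -> str:
    """Cookie value that lets a visitor skip the CAPTCHA on later visits."""
    nonce = secrets.token_hex(8)
    return f"{nonce}:{_sign(f'human:{nonce}')}"


def has_passed(cookie_value: str) -> bool:
    nonce, _, signature = cookie_value.partition(":")
    return _matches(f"human:{nonce}", signature)
```

The `challenge:` and `human:` prefixes keep the two kinds of signature separate, so a challenge token cannot be reused as a "passed" cookie.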

5. Ask for Personal Information

After a CAPTCHA has been successfully completed, you can ask users to fill out a form that includes a valid email address. Your users will then need to open an email and click a link to verify their email address before they can access the website.
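A verification link usually carries a signed, time-limited token. The sketch below assumes a 24-hour lifetime, a hypothetical `https://www.example.com/verify` endpoint, and a secret key generated at startup. It signs the email address and issue time with HMAC-SHA256 so the server can check the link without storing anything; sending the email itself is out of scope.

```python
import base64
import hashlib
import hmac
import secrets
import time
from typing import Optional
from urllib.parse import urlencode

SECRET_KEY = secrets.token_bytes(32)  # assumption: load from config in practice
TOKEN_TTL_SECONDS = 24 * 60 * 60      # assumed 24-hour link lifetime


def _signature(email: str, issued: int) -> str:
    message = f"{email}|{issued}".encode()
    return hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()


def make_token(email: str, now: Optional[float] = None) -> str:
    """Encode the email, issue time, and signature into a URL-safe token."""
    email = email.strip().lower()
    issued = int(time.time() if now is None else now)
    raw = f"{email}|{issued}|{_signature(email, issued)}".encode()
    return base64.urlsafe_b64encode(raw).decode()


def verification_link(token: str) -> str:
    # Hypothetical endpoint; urlencode escapes the token's "=" padding.
    return "https://www.example.com/verify?" + urlencode({"token": token})


def verify_token(token: str, now: Optional[float] = None) -> Optional[str]:
    """Return the verified email, or None if the token is forged or expired."""
    try:
        decoded = base64.urlsafe_b64decode(token.encode()).decode()
        email, issued_text, signature = decoded.rsplit("|", 2)
        issued = int(issued_text)
    except ValueError:  # bad base64, bad UTF-8, wrong field count, bad int
        return None
    expected = _signature(email, issued)
    if not hmac.compare_digest(expected.encode(), signature.encode()):
        return None
    current = time.time() if now is None else now
    if current - issued > TOKEN_TTL_SECONDS:
        return None
    return email
```

Because the token is self-verifying, the server only needs to mark the address as confirmed once `verify_token` returns it.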

Similarly, you can ask users to enter their mobile phone number. They then receive a verification code on their phone, which they type into the webpage to be granted access.
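Phone verification follows a similar pattern with a short numeric code. In this hypothetical sketch, codes are six digits, expire after an assumed 10 minutes, allow an assumed five attempts, and are kept in an in-memory dictionary. A real site would use persistent storage and an SMS provider to deliver the code, neither of which is shown here.

```python
import hmac
import secrets
import time
from dataclasses import dataclass
from typing import Dict, Optional

CODE_TTL_SECONDS = 10 * 60  # assumed 10-minute validity
MAX_ATTEMPTS = 5            # assumed guess limit per code


@dataclass
class PendingCode:
    code: str
    expires_at: float
    attempts: int = 0


# In-memory store keyed by phone number; a real site would persist this.
_pending: Dict[str, PendingCode] = {}


def issue_code(phone: str, now: Optional[float] = None) -> str:
    """Create a zero-padded 6-digit code; delivering it by SMS is not shown."""
    now = time.time() if now is None else now
    code = f"{secrets.randbelow(10**6):06d}"
    _pending[phone] = PendingCode(code, now + CODE_TTL_SECONDS)
    return code


def verify_code(phone: str, submitted: str, now: Optional[float] = None) -> bool:
    """Accept a correct, unexpired code once; count every attempt."""
    now = time.time() if now is None else now
    entry = _pending.get(phone)
    if entry is None or now > entry.expires_at or entry.attempts >= MAX_ATTEMPTS:
        return False
    entry.attempts += 1
    if hmac.compare_digest(entry.code.encode(), submitted.strip().encode()):
        del _pending[phone]  # one-time use
        return True
    return False
```

The attempt limit matters because a six-digit code has only a million possibilities; without it, a bot could simply guess.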

At this point, you should have eliminated nearly all bot traffic to your website. Sophisticated bots may still be able to pass through all of these new security measures, but most bots will be foiled, and you can give your clients accurate data about the traffic on their websites.

The experts at Rank Fuse would be happy to help you eliminate bot traffic to your website. If you are looking for a digital marketing company to help your company, give Rank Fuse Digital Marketing a call today at 913-703-7265 or contact us.